Hey everyone. Sorry for the long delay (I have been working on another writing project, more on that later…). Recently I got a pair of new graphics cards based on Nvidia’s new Turing architecture. This has been advertised as being more efficient than the outgoing Pascal architecture, and is the basis of the popular RTX series GeForce cards (2060, 2070, 2080, etc.). It’s time to see how well they do some charitable computing, running the now world-famous disease research distributed computing project Folding@Home.

Since those RTX cards with their ray-tracing cores (which do nothing for Folding) are so expensive, I opted to start testing with two lower-end models: the GeForce GTX 1660 Super and the GeForce GTX 1650.

These are really tiny cards, and should be perfect for some low-power summertime folding. Also, today is the first time I’ve tested anything from Zotac (the 1650). The 1660 Super is from EVGA.

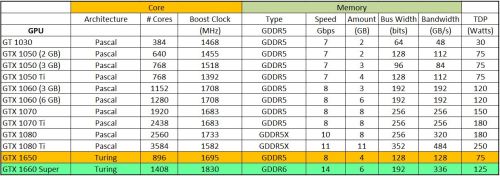

GPU Specifications

Here’s a quick table I threw together comparing these latest Turing-based GTX 16xx series cards to the older Pascal lineup.

It should be immediately apparent that these are very low power cards. The GTX 1650 has a design power of only 75 watts, and doesn’t even need a supplemental PCI-Express power cable. The GTX 1660 Super also has a very low power rating at 125 Watts. Due to their small size and power requirements, these cards are good options for small form factor PCs with non-gaming oriented power supplies.

Test Setup

Testing was done in Windows 10 using Folding@Home Client version 7.5.1. The Nvidia Graphics Card driver version was 445.87. All power measurements were made at the wall (measuring total system power consumption) with my trusty P3 Kill-A-Watt Power Meter. Performance numbers in terms of Points Per Day (PPD) were estimated from the client during individual work units. This is a departure from my normal PPD metric (averaging the time-history results reported by Folding@Home’s servers), but was necessary due to the recent lack of work units caused by the surge in F@H users due to COVID-19.

Note: This will likely be the last test I do with my aging AMD FX-8320e based desktop, since the motherboard only supports PCI Express 2.0. That is not a problem for the cards tested here, but Folding@Home on very fast modern cards (such as the RTX 2080 Ti) shows a modest slowdown (around 10%) if the cards are limited by PCI Express 2.0 x16. Thus, in the next article, expect to see a new benchmark machine!

System Specs:

- CPU: AMD FX-8320e

- Mainboard : Gigabyte GA-880GMA-USB3

- GPU: EVGA 1080 Ti (Reference Design)

- Ram: 16 GB DDR3L (low voltage)

- Power Supply: Seasonic X-650 80+ Gold

- Drives: 1x SSD, 2 x 7200 RPM HDDs, Blu-Ray Burner

- Fans: 1x CPU, 2 x 120 mm intake, 1 x 120 mm exhaust, 1 x 80 mm exhaust

- OS: Win10 64 bit

Goal of the Testing

For those of you who have been following along, you know that the point of this blog is to determine not only which hardware configurations can fight the most cancer (or coronavirus), but to determine how to do the most science with the least amount of electrical power. This is important. Just because we have all these diseases (and computers to combat them with) doesn’t mean we should kill the planet by sucking down untold gigawatts of electricity.

To that end, I will be reporting the following:

- Net Worth of Science Performed: Points Per Day (PPD)

- System Power Consumption (Watts)

- Folding Efficiency (PPD/Watt)
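The last metric is just the first divided by the second. Here is a minimal Python sketch of that calculation. The function name is my own, and the inputs are rounded, illustrative figures in the neighborhood of the results discussed further down (about 500K PPD at roughly 150 watts, and about 300K PPD at roughly 125 watts), not exact measurements.

```python
def folding_efficiency(ppd: float, wall_watts: float) -> float:
    """Return efficiency in PPD per watt of total system power at the wall."""
    if wall_watts <= 0:
        raise ValueError("wall_watts must be positive")
    return ppd / wall_watts


# Rounded, illustrative inputs (assumptions, not exact measurements).
examples = {
    "GTX 1660 Super (reduced power limit)": (500_000, 150),
    "GTX 1650 (reduced power limit)": (300_000, 125),
}

for name, (ppd, watts) in examples.items():
    print(f"{name}: {folding_efficiency(ppd, watts):,.0f} PPD/W")
```

Because the wattage is measured at the wall, the result includes the CPU, drives, fans, and power supply losses, so the same card will score differently in a different host machine.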

As a side-note, I used MSI Afterburner to reduce the GPU Power Limit of the GTX 1660 Super and GTX 1650 to the minimum allowed by the driver / board vendor (in this case, 56% for the 1660 Super and 50% for the 1650). This is because my previous testing, plus the results of various people in the Folding@Home forums and elsewhere, have shown that by reducing the power cap on the card, you can get an efficiency boost. Let’s see if that holds true for the Turing architecture!

Performance

The following plots show the two new Turing architecture cards relative to everything else I have tested. As can be seen, these little cards punch well above their weight class, with the GTX 1660 Super and GTX 1650 giving the 1070 Ti and 1060 a run for their money. Also, the power throttling applied to the cards did reduce raw PPD, but not by too much.

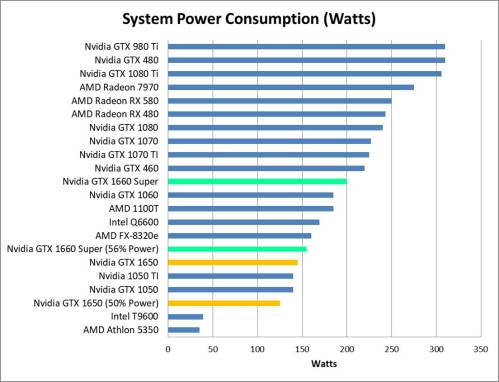

Power Draw

This is the plot where I was most impressed. In the summer, any Folding@Home I do directly competes with the air conditioning. Running big graphics cards, like the 1080 Ti, causes not only my power bill to go crazy due to my computer, but also due to the increased air conditioning required.

Thus, for people in hot climates, extra consideration should be given to the overall power consumption of your Folding@Home computer. With the GTX 1660 Super running in reduced power mode, I was able to get a total system power consumption of just over 150 watts while still making over 500K PPD! That’s not half bad. On the super low power end, I was able to beat the GTX 1050’s power consumption level…getting my beastly FX-8320e 8-core rig to draw 125 watts total while folding was quite a feat. The best thing was that it still made almost 300K PPD, which is well above last generation’s small cards.

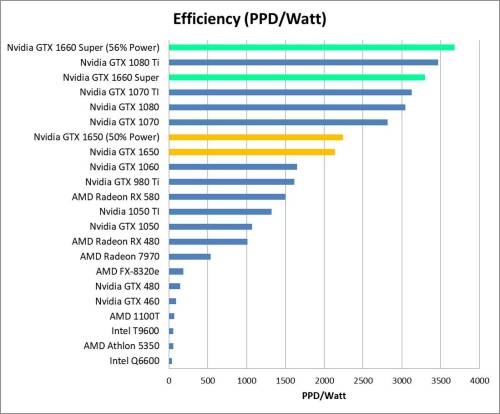

Efficiency

This is my favorite part. How do these low-power Turing cards do on the efficiency scale? This is simply looking at how many PPD you can get per watt of power draw at the wall.

And…wow! Just wow. For about $220 new, you can pick up a GTX 1660 Super and be just as efficient as the previous generation’s top card (GTX 1080 Ti), which still goes for $400-500 used on eBay. Sure, the 1660 Super won’t be as good of a gaming card, and it makes only about two-thirds the PPD of the 1080 Ti, but on an energy efficiency metric it holds its own.

The GTX 1650 did pretty well too, coming in somewhere towards the middle of the pack. It is still much more efficient than the similar market segment cards of the previous generation (GTX 1050), but it is overall hampered by not being able to return work units to the scientists as quickly. Folding@Home prioritizes fast work by awarding bonus points (the Quick Return Bonus), so slower cards earn fewer points per work unit.
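To get a feel for how the Quick Return Bonus rewards speed, here is a minimal sketch of the bonus formula as I understand it from the Folding@Home points FAQ: final points = base points × max(1, sqrt(k × deadline / elapsed time)). The function name and the sample values for base points, k, and the deadline are made up for illustration; the real values are set per project. The bonus only applies when a passkey is configured, which the `has_passkey` flag models in a simplified way.

```python
import math


def qrb_points(base_points: float, k: float, deadline_days: float,
               elapsed_days: float, has_passkey: bool = True) -> float:
    """Estimate the credit for one work unit under the Quick Return Bonus.

    All parameter values used below are hypothetical examples.
    """
    if elapsed_days <= 0:
        raise ValueError("elapsed_days must be positive")
    if not has_passkey:
        # Without a passkey only the base points are awarded.
        return float(base_points)
    bonus = max(1.0, math.sqrt(k * deadline_days / elapsed_days))
    return base_points * bonus


# Hypothetical work unit: 1,000 base points, k = 0.75, 3-day deadline.
fast = qrb_points(1000, 0.75, 3.0, elapsed_days=0.03)
slow = qrb_points(1000, 0.75, 3.0, elapsed_days=0.12)
print(f"fast card: {fast:,.0f} points, slow card: {slow:,.0f} points")
```

In this sketch, a card that takes four times as long earns half the points for the same work unit, so its PPD falls by a factor of eight rather than four. That is why slower cards slide down the efficiency chart even when their power draw is low.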

Conclusion

NVIDIA’s entry-level Turing architecture graphics cards perform very well in Folding@Home, both from a performance and an efficiency standpoint. They offer significant gains relative to legacy cards, and can be a good option for a budget Folding@Home build.

Join My Team!

Interested in fighting COVID-19, Cancer, Alzheimer’s, Parkinson’s, and many other diseases with your computer? Please consider downloading Folding@Home and joining Team Nuclear Wessels (54345). See my tutorial here.

Interested in Buying a GTX 1660 or GTX 1650?

Please consider supporting my blog by using one of the below Amazon affiliate search links to find your next card! It won’t cost you anything extra, but will provide me with a small part of Amazon’s profit so I can keep paying for this site.

Thanks, this is the sort of thing I’m looking for.

I’ve got a Dell T7400, eight Xeon cores, gobs of RAM, no UEFI bios, Linux. It’s been folding away since 26-March. No GPU slot. The machine can support 2 X 225 watt GPUs in PCIe 2.0 slots. Thinking about adding one GPU for now. So we’ll have a 4-core CPU Slot, and a GPU slot, and plenty of power for my own network.

Trying to figure out best bang for buck < $300 for 12 months.

Seems like GTX1660/Super is going to be it — I can pay up to about $225 for it. If I could get them really cheap, I'd run two of them.

That sounds like a good build to me, I’m really liking the 1660 Super

I’ve got a couple questions, if anybody’s got any wisdom.

1) My machine has PCIe 2.0 slots. Will the slower I/O overwhelm any real advantage of the “Super” version vs. the standard 1660?

2) Does it really matter what brand of card I buy?

1) I found that the 1660 Super gets the same points on PCIe 4.0 as it does on 2.0 in Windows 10. I think the slot bandwidth only comes into play with really fast cards, perhaps like the 1080 Ti or 2080 Ti, and even then it’s only something like a 10% hit.

2) Not really, unless you plan on overclocking. The differences between a lot of the third-party card vendors come down to the power handling and cooling on the board, plus some minor factory overclocking. I usually go with EVGA, but I have had good luck with basically all of them (Zotac, Gigabyte, Asus, MSI, etc.). Sometimes I change brands if there is a deal going on.

Great, thanks. Sounds like I’ll be well-enough balanced. I paid $99 bucks for the server, and did not have folding in mind. But I figure this is a little contribution I can make instead of letting the machine idle.

I’d like to build a low(ish) end PC for folding and managing several 4K realtime video streams. I’ve been folding on 3 systems (MacBook Pro, old Win 10 laptop, Synology NAS) without a GPU and want to replace the old Win 10 laptop I use for the cameras.

What do you recommend for building a system or is there a site you’d recommend?

Awesome! I’d start with a new, efficient processor like the AMD Athlon 3000G (dual core, with only a 35 watt TDP). 8 GB to 16 GB of whatever DDR4 RAM happens to be on sale will work fine (folding is not very sensitive to system memory). I like the B450 chipset socket AM4 boards…more energy efficient than the new X570 ones and still have more features than typical budget boards. I’ve had good luck with Asus and ASRock boards lately. For the GPU, I’d say the 1660 Super, or if you can swing it the RTX 2060 Super will give you lots of PPD while not sucking down too much power. I like my EVGA 1660 Super quite a lot. Grab a good name brand power supply (Seasonic, Antec, Corsair, Thermaltake, BeQuiet, PC Power & Cooling, etc.). Stay away from no name power supplies that might come with the case…these are usually inefficient and in my case caused damage to my computer under heavy loads. Right now, I’d say 80+ Gold is a good efficiency to price sweet spot for power supply ratings. If you prefer Intel parts, I’d check out the Pentium Gold line, such as the G5400.

Curious why you didn’t test any mobile systems. My Alienware 17 with a 9980HK and an RTX1080 MaxQ (990Mhz) is folding about 1.3M/day @ ~170W just folding on the GPU. I run it with all 8 cores going with Boinc (1/2[HT] for the GPU and 7/14[HT] for Boinc) and it’s about 185W.

I’m not some save the world greenie, but I am a save money guy. I’ve thought about rigging a 300W solar panel array to run this during the day. I already run Boinc (World Community Grid and Rosetta) on a small Android phone that is 100% solar.

I’d test them if I had them…last laptop I tested was my old core2duo Dell Inspiron from 2008! High-end mobile systems like yours sound like a great option…they are tuned for efficiency moreso than desktops. Those are great PPD and wattage numbers. If you aren’t running Boinc, what is the total system wattage while folding? I could throw it up on one of my comparison plots…it’s going to look really good I think. How are the temps on that machine? Lots of people in the folding forums try using laptops and end up having overheating problems

Hey Chris, The weird thing is, the system operates cooler with Boinc running on all cores because it keeps the CPU clock throttled by like 50% to 2GHz. So the wattage is about the same as just running F@H (it bounces between 160 and 170W) with the CPU and one core at around 4GHz. If I turn off Boinc, F@H bounces between cores as they hit 100C and stop and the system passes F@H to another core, it hits 100C, and on again. That’s hard on the CPU and probably would cause WU errors. So it’s actually easier on the CPU to just run both F@H and Boinc. I haven’t figured out how to manually clock the CPU down when just one core is at 100% to prevent the 100C tmax timeout.

The only time I’ve gotten WU errors is when pausing, or doing other stuff on the laptop that is GPU-related.

But I’m going to say 160W average on the Kill-A-Watt when just running F@H. I’m going to say ~1.2M PPD, but I haven’t tried 24 hours just with F@H because of the tmax issue. Honestly I don’t think running Boinc hurts the Folding performance. I have Boinc set at 87.5% of the cores, which leaves 1 (2 counting hyperthreading) dedicated for F@H.

Alienware ships with a control center and I have it set at the “cool” setting, but it only seems to kick in when all cores are running.

You know, in the early days of the Intel Core, there was a desktop motherboard you could use with mobile CPU’s (I think Socket M?). Like 2006. I built such a rig and was phase changing that beast with great overclocks. Back then I just ran Prime95. I’m years out of my nerdy overclocking days. But I wonder if there is similar mobile-socketed desktop motherboard today? If you could watercool this CPU in a multi-RTX SLI configuration it would be epic in terms of points per watt. I paid too much for this laptop ($4K!) to turn it into a watercooled Frankenstein, haha.

Gotcha. That’s an impressive laptop! Try turning off CPU Turbo mode in the BIOS (advanced tab I think). It should stop the silly high temps, and I don’t think it will affect F@H or Boinc performance much, if at all.

Awesome tip on the mobile Mobo…I wonder, if one doesn’t exist…if we could get ahold of a laptop like yours and take the board out and mod it into a desktop case! Would set an efficiency record lol

Dissipating 185W out of a laptop case is pretty impressive! I think most laptops could handle maybe a quarter of that. More space makes the cooling solution easier, and cheaper. But it sounds like this works for you.

It’s an Alienware gaming laptop, so it’s supposed to dissipate the GPU heat. Good thing I got that extra 3 year warranty! Wish there were a way to watercool laptops, haha.

You mention water cooling in another reply (that I cannot reply to directly, as it’s a second-level reply already), but did you try a cheap laptop cooling pad with built-in fans? They can do wonders for thermals and would probably let your CPU throttle to much higher clocks, giving you even more performance. I would also consider removing the battery if it’s easily swappable (not in your case if the Alienware 17 R2 is your SKU) to avoid shortening its lifespan by keeping it in the heat.

Hey, I have my laptop on a stand with no fans. I read several reviews of those fan pads, but none seemed to actually lower the CPU or GPU temps. Of course, removing heat in general is good for components like the battery, as you mentioned, even if CPU and GPU temps don’t change.


My Fury X shows almost 800k PPD on Project 14417, which is very nice considering I bought it during the mining craze, when RX 580s and GTX 1060s were hard to find or mightily overpriced. It also fixes the bad aftertaste of its unimpressive gaming performance.

That’s cranking! Awesome

After reading your review I got a GTX 1650 for my low budget Xeon X79 build. I am using solar power exclusively, no power line reaches my house. I use the rig for work, running docker stuff on the 20 cores. It seems this way I can comfortably work on it while lending a hand to science.

p.s. also joined the team.

That’s awesome! And thanks for joining! Sun powered folding is the best (we are grid tied, but we also have panels. They make a huge dent in the summer / usually more than eliminate the monthly bill)

Hey Chris, I might need some help because my Asus Geforce GTX 1650 only folds about 60k PPD in the 16x slot and on the 1x -> 16x USB riser about 50k PPD. You (and other sources) report 400k PPD. Are there any tweaks or settings to get to that number?

Hi! My first thought is that you might not have a passkey set up. You need one of those to get the exponential quick return bonus points. See the link here.

https://foldingathome.org/support/faq/points/#:~:text=So%20in%202010%20we%20introduced,will%20continue%20to%20use%20it.

You can get a passkey here

https://foldingathome.org/support/faq/points/passkey/

Ha, I did not have a passkey yet.

That made it jump to 260k ppd almost immediately. Let’s see what that stabilizes at over the next few days.

Thanks a lot!


It gets very very hot where I live in summer, so I’ve been tweaking my main computer for power efficiency to reduce heat. Glad I found this site as I run my PC with F@H right now as I’m not gaming as much on my PC.

I found this article https://www.tomshardware.com/features/graphics-card-power-consumption-tested

and it seems that the 1660 Ti is, according to the tests, the most power efficient, followed by the AMD 5700 (non-XT). The 1660 Super is in 3rd place on their chart. Since the Ti is a bit more powerful than the Super, why not give that a try if you have the funds.

I have a titanium 80+ PSU and find that it runs MUCH cooler than my old bronze 80+. I’ve got a 5700XT running, and I stopped running it with my Ryzen 3600X. But the 5700XT seems to run nicely just by running it at half power. It is at about 90w on the GPU

Really think this is the best option for me, and I’m not sweating in this summer heat as I don’t run my air in the house until it hits 80, I know, I’m insane. But I find that this is working out. If you are interested I can keep you posted on the 5700XT PPD. Sadly I don’t have a wall meter to test power draw, but I have a 3600X CPU and a x470 MoBo because the x570 boards are set up for PCIe 4.0 and draw extra power for no reason. Required a bios update to use the 3000 series CPU, but it was worth it.

Good info, thanks! The 5700XT is on my list to try this fall, and I recently picked up a 1660ti, which looks to be a bit faster than the 1660 super while using 5 watts less power. So yes, definitely interested in testing that out. It’s taking a while to get through my 3950x testing (too many core settings to test!), but I’ll be back on graphics cards soon I think.


Hi Chris,

just found your website when I was doing research on the most efficient GPU to run the F@H client. I’m currently running two setups:

a) Core i5-4690K + 8GB RAM + GTX 1070

b) Ryzen 9 3950X + 32 GB ECC RAM + RTX 2070 Super

75% of the electricity comes from our solar collectors and 25% from a CO₂-neutral grid contract.

To be honest, I’m a bit of a competitive person and recently joined a new team where I really want to be #1. 😀

That’s why I’m looking into building another system:

c) Ryzen 7 3800X(T) + 32 GB RAM + 3 x GTX 1660 Super

Right now I’m trying to figure out which case, power supply and mainboard to use. I might go with the Asus ROG STRIX B450-F GAMING, which would allow me to run the 3400G processor and use its embedded GPU for the config screen together with three 1660S dedicated to F@H.

I settled on the GPU because of your test results. Thanks a million!

If you are interested, I’ll keep you posted about my progress. 🙂

Kind regards,

Philipp

Hi Philipp, glad I could help! Those are some nice folding rigs and will really help make a difference. Awesome that your power is so clean…in the summer my rooftop array makes more than enough to cover our usage which is awesome…turning photons into science is great!

Definitely let me know how that build turns out. I recently picked up a 1660 Ti which should be slightly more efficient than the Super (5 watts less power consumption). The real reason I went for the Ti that I have is that I found one with a blower style heatsink, so it will exhaust 100 percent of the hot air out the back of the case. I’m interested in multi card setups too (going to run this with my 1080 Ti in the same case in my 3950x rig). Getting all the heat out in multi-card GPU rigs is a challenge. Thankfully, running them at a 50 percent power target to maximize efficiency helps immensely with the heat problem.

This morning my wife gave me the go-ahead for this little project.

“Everyone needs a hobby,” she said … 😀

I think I’m going to go with the 1660 Ti too. Also I’m going to add a couple of additional high speed fans to the computer case to keep the temperatures low.

The components have been ordered:

– 1 x ASUS PRO WS X570-ACE, Mainboard (it comes with three PCIe-4.0-x16 slots and is made for Workstations)

– 3 x GIGABYTE GeForce GTX 1660 Ti OC 6G

– AMD Ryzen™ 7 3700X, CPU

– 16 GB of RAM (should be enough for running Ubuntu)

– be quiet! DARK BASE 900 with some additional high-speed fans and a 850W PSU

– SAMSUNG 970 EVO 500 GB

Let the fun begin. 🙂

Today I’ve assembled the components and the server is running through its first overnight BurnInTest doing some science. Looks like power consumption has gone up about 500W and I’m still trying to figure out how to set up the client on Ubuntu to let me access it from a Windows based fahcontrol.exe.

SSH works fine though.

Tomorrow I’m going to play a bit with the fan configuration, right now it’s just three front intake fans and one outtake fan at the rear. And there’s a fan blowing cold air on two of the GPUs. I’d like to see whether the GPU temperatures change when I let this fan run in reverse to support the GPU fans to blow away the hot air.

Also I’m going to have a look at side panel fans blowing in cold air.

Awesome, that’s exciting! For my Ubuntu clients, I ended up just using Teamviewer as a standalone application to access the machine remotely from my windows PC and android phones. Way back in the day I had the clients configured to talk to my Windows PC, but I can’t quite remember how well that worked.

Side panel intake fans made a huge difference for me on my quad GPU space heater build. I now run at least one 120mm side panel intake pointed at the cards for my dual GPU setups. In two cases that didn’t support side panel fans, I ended up cutting the panels with my Dremel tool.

The folding-machine is running headless now. I got my SSH access, which should be sufficient to monitor performance and temperatures. 🙂 FAHControl doesn’t work with Ubuntu 20.04 LTS anyway because Gtk2 and python2 are no longer developed nor supported by 20.04 LTS.

I have experimented with different setups of fans. In my case, the basic setup works best:

– one Corsair ML140 with 2,000 RPM at the upper back, blowing the hot air out of the case

– three Corsair ML140 with 2,000 RPM blowing cold air in from the front.

The CPU (3700X) usually peaks at 77 °C (171 °F) and is fully committed to folding.

My three GPUs (1660 Ti) show very different temperatures:

– The first has enough space and usually runs at 67 °C (153 °F) and 71% Fan speed. It usually maxes out the power limit.

The second and third are cramped together without any space in-between. This is not ideal and I have therefore tried to bring their temperatures down a bit more.

– The second GPU runs at 70 °C (158 °F) at 76% Fan Speed and gets throttled to about 100W of 120W

– The third GPU runs at 82 °C (180 °F) at almost 100% Fan Speed and has an enforced power limit of 80W.

Max temperature specification for the GPU is 95 °C (203 °F).

As mentioned before I’ve tried to lower the GPU temperatures by using different fan setups.

The ASUS PRO WS X570-ACE mainboard comes with a two-way VGA holder, on which an additional system fan (up to 120mm) can be mounted. This VGA holder turned out to be pretty much useless in my case as there was no cooling effect from an additional fan.

Setup a-1) VGA holder with blow on fan

– GPU 1: 76 °C

– GPU 2: 83 °C

– GPU 3: 87 °C

Setup a-2) VGA holder with blow on fan and blow in side panel fan

– GPU 1: 71 °C

– GPU 2: 76 °C

– GPU 3: 86 °C

Setup b-1) VGA holder with blow off fan

– GPU 1: 71 °C

– GPU 2: 76 °C

– GPU 3: 86 °C

Setup b-2) VGA holder with blow off fan and blow in side panel fan

– GPU 1: 67 °C

– GPU 2: 72 °C

– GPU 3: 87 °C

Setup c) no VGA holder, no side panel fan, just standard

– GPU 1: 67 °C

– GPU 2: 70 °C

– GPU 3: 87 °C

I therefore settled on the standard fan setup of three intake fans and one exhaust fan.

In order to bring down the temperature of the third GPU I manually enforced a power limit of 80W.
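For anyone wanting to try the same on Linux, one common way to cap a card is `nvidia-smi` with its `-pl` (power limit, in watts) option. The comment does not say which tool was actually used, so treat this as a sketch under that assumption. The helper name is hypothetical, and the GPU index 2 for the third card assumes zero-based enumeration in slot order, which may not match a given system (check with `nvidia-smi -L`). The snippet only builds and prints the command; it does not run it.

```python
def power_limit_command(gpu_index: int, watts: int) -> list:
    """Build the nvidia-smi argument list that caps one GPU's power draw.

    gpu_index is the index reported by `nvidia-smi -L` (assumed here).
    """
    if gpu_index < 0:
        raise ValueError("gpu_index must be non-negative")
    if watts <= 0:
        raise ValueError("watts must be positive")
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


# Assumed mapping: the third card is GPU index 2.
cmd = power_limit_command(gpu_index=2, watts=80)
print("sudo " + " ".join(cmd))
```

Changing the limit needs root privileges, the driver rejects values outside the range the board vendor allows, and the setting does not survive a reboot, so it is usually reapplied from a startup script.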

Final Setup: no VGA holder, no side panel fan, three in, one out, 80W power limit for GPU 3

– GPU 1: 67 °C

– GPU 2: 70 °C

– GPU 3: 82 °C

Overall power consumption is at 445W and it looks like the machine produces about 3,000,000 credits every day. I’m still trying to figure out how to connect my windows based fahcontrol.exe to the new command line client, but haven’t had any luck so far.

Just had an idea: I’m going to swap the third 1660 Ti for an older GTX 1060, which is longer, higher and narrower. This should improve overall cooling and efficiency. 🙂

That might work. Did you get blower style 1660 tis or open fan cards?

Unfortunately with open fans, as I didn’t find any blower style cards here in Germany. Some of the RTX 4000 (+) series come with a 1-slot, blower style design, at a much higher price.

I might experiment with the VGA holder again to see whether the new setup with a little more space between the cards makes a difference.:)

I tried, but it didn’t work, because the old GTX 1060 is kind of oversized and didn’t leave any room at all to its neighbour.

But leaving the three 1660 Ti in there, running 24/7, wasn’t an option either. Even with the new power limit, the temperature was still way too high for continuous operation. I have therefore removed the third GPU and used it to replace my old GTX 1060 in my children’s computer.

So, choosing three GPUs was probably a mistake and I should have instead bought two RTX 2060 Supers. Since learning was a big motivation for doing this project, I’m ok with the outcome. 😀

It might be only about 2,500,000 credits per day.

I have since experimented with a couple of other cooling fan setups.

Anything that disturbs the airflow from front to back causes the temperature of either the CPU or the GPUs to increase.

In short, there was no benefit from installing additional large fans on the top, blowing in or out, nor was there a benefit from an additional fan blowing in from the bottom.

Next stage of my experiments is the addition of three 4cm x 4cm x 2cm Noctua NF-A4x20 cooling fans to the back bezel right behind the GPUs, in order to support the air flow from front to back.

After all, I might swap my 2070 Super for a 3080 for Christmas. 😉

Update on using the three mini fans. Both GPUs are running 3 degrees hotter, even though the fans are pulling out a lot of hot air. This is truly fascinating! I’m going to turn them around now to push cold air in, just for the fun of trying this. 😀

Fun experiment. I tried those little fans way back in the day too on my overheating rig that was running a 1080 Ti and a 980 Ti, couldn’t really see a difference either way. The thing that solved it was cutting a hole in the side panel and mounting an external 120mm fan blowing cold air right at the GPUs

Final setup is complete. And I’m very surprised by some new findings:

a) The GTX 1660 Tis are hot heads

b) My new RTX 2060 is much more efficient than the 1660 Ti

The setup looks as follows:

– Ryzen 3700X ~ 180,000 PPD

– GeForce GTX 1660 Ti ~ 650,000 PPD at a 100 W power limit (120 W default) (69 °C)

– GeForce RTX 2060 ~ 1,200,000 PPD at 150 W of the 160 W default power limit (71 °C)

– GeForce GTX 1660 Ti ~ 650,000 PPD at a 100 W power limit (120 W default) (83 °C)

On my Win 10 workstation the RTX 2070 Super produces about 1,800,000 PPD at 193W of the 215W Default-Power-Limit (68° C)
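As a quick check on finding b), the figures quoted above can be turned into PPD per GPU watt. This is only a rough sketch: the wattages are the per-card power figures from the list, not wall measurements, so the results are not directly comparable to whole-system PPD/Watt numbers. The dictionary layout and function name are my own.

```python
# (PPD, GPU power in watts) as quoted in the list above.
gpus = {
    "GTX 1660 Ti": (650_000, 100),
    "RTX 2060": (1_200_000, 150),
    "RTX 2070 Super": (1_800_000, 193),
}


def ppd_per_gpu_watt(ppd: float, watts: float) -> float:
    """PPD divided by the card's own power draw (not total system power)."""
    return ppd / watts


# Sort card names from most to least efficient.
ranking = sorted(gpus, key=lambda name: ppd_per_gpu_watt(*gpus[name]),
                 reverse=True)

for name in ranking:
    print(f"{name}: {ppd_per_gpu_watt(*gpus[name]):,.0f} PPD per GPU watt")
```

On these numbers the RTX 2060 comes out at about 8,000 PPD per GPU watt against 6,500 for the power-limited 1660 Ti, with the RTX 2070 Super a little higher still.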

The mainboard, an ASUS PRO WS X570-ACE, broke; I guess it overheated. After all, the X570 chipset is known as a hothead … the symptoms were erratic boot-up behaviour after restarts or standby mode. Sometimes the system did not even show the BIOS boot screen. I’ve replaced it with a Gigabyte B550 Aorus Elite V2 and everything is working fine now. Unfortunately this mainboard can only fit two GPUs.

The CPU (3700X) with a new be quiet! Shadow Rock 3 now peaks at 70 °C (158 °F), which is about 10% lower than with the boxed AMD cooler that I previously used. GPU temperatures remain pretty much the same. A big factor for them is the power limit chosen.

Hi Chris, I am trying to subscribe to your blog (searched Green Folding@home and variations), but it does not seem to be listed on WordPress. I’m an old noob, so let me know what I am doing wrong.

I bought an EVGA GTX 1650 Super SC before I’d ever heard of Folding@Home, but have been running it 24×7 for months. I recently started working on over-clocking my GPU, and am folding at 835-845K PPD. At the default settings, the GPU was running at about 500-550K PPD. I used the following article and stayed below the maximums it lists for over-clocking:

https://www.techpowerup.com/review/evga-geforce-gtx-1650-super-sc-ultra/33.html

For over-clocking, I used the EVGA Precision X1 application, but most folks seem to prefer MSI’s Afterburner.